How have we used R to improve the implementation of clustering algorithms?

This is the fourth case study in the series, showcasing some of the ways we have been using R at Barnett Waddingham.

This case study shows how we have used R to implement clustering algorithms and demonstrates how this can be used to reduce the number of model points required in actuarial models, and hence reduce runtime.

What is clustering?

Clustering algorithms are used to identify groups of data within a larger dataset, such that data points within one group (or “cluster”) are more similar to each other than to points in other groups. This is similar to ‘grouping’ policyholder data; the difference is that clustering is a form of unsupervised learning, so users do not need to define the grouping rules themselves. Because the groups are derived from the data rather than from pre-specified rules, clustering can improve on traditional grouping of policyholder data.

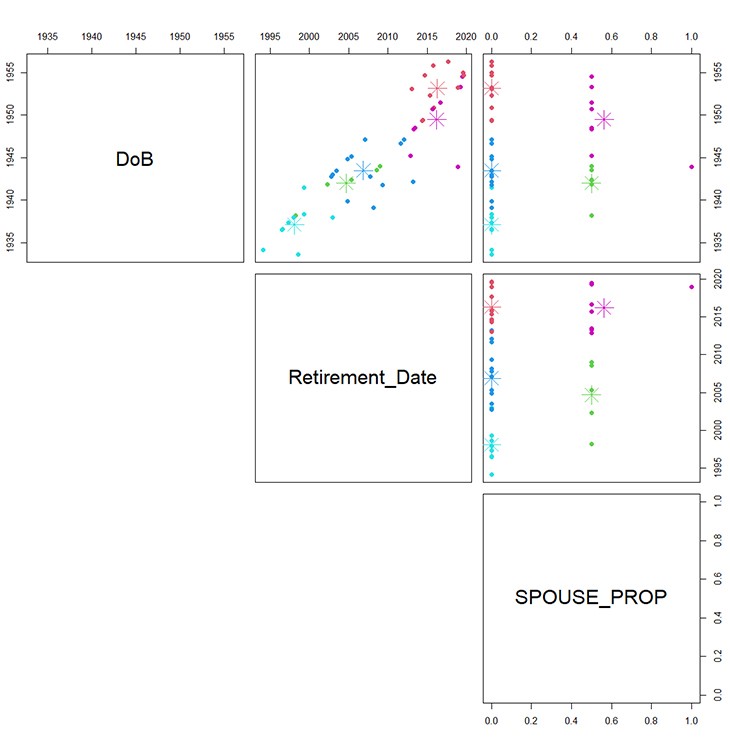

Often, a single point (or “cluster centroid”) is derived to represent all points within a cluster, generating a reduced (or “clustered”) dataset that captures the key characteristics of the original dataset but with fewer data points. This is illustrated in the scatter plot below, where the colour of each point indicates the cluster it has been assigned to and each cluster's centroid is shown as a large star.

Scatter plot matrix with cluster centres
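A minimal sketch of how a reduced dataset can be derived once every point has a cluster label: each centroid is taken as the mean of its members and carries the cluster size as a weight. Python is used purely for illustration (this is not the authors' R tool), and the data, the use of the mean and the count weighting are all assumptions.

```python
from collections import defaultdict


def cluster_centroids(points, labels):
    """Map each cluster label to (centroid, size), where the centroid is
    the mean of the member points and size is the number of members."""
    n_features = len(points[0])
    sums = defaultdict(lambda: [0.0] * n_features)
    counts = defaultdict(int)
    for point, label in zip(points, labels):
        for j, value in enumerate(point):
            sums[label][j] += value
        counts[label] += 1
    return {
        label: (tuple(total / counts[label] for total in sums[label]), counts[label])
        for label in sums
    }


# Hypothetical policy data: (age, annual annuity amount in £)
points = [(65, 5000), (67, 5200), (66, 4800), (80, 12000), (82, 11800)]
labels = [0, 0, 0, 1, 1]

reduced = cluster_centroids(points, labels)
for label, (centroid, size) in sorted(reduced.items()):
    print(label, centroid, size)
# 0 (66.0, 5000.0) 3
# 1 (81.0, 11900.0) 2
```

Five policies become two weighted model points; in a real model-point file the weight would typically scale the centroid's cashflows so that it stands in for all of its members.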

What are some of the potential uses for clustering?

Clustering is typically used to classify data so that it can be more easily understood and manipulated. It has been applied across a wide range of industries, in areas such as market research, medical imaging, social network analysis and image processing. The key applications relevant to life insurance are as follows:

The actuarial models used by life insurers can have long and onerous run-times, particularly where nested stochastic models are used. IFRS 17 has exacerbated this: models have become more complex and the number of runs performed has increased significantly, driving up run-times and associated costs. Clustering can be applied to in-force policy data to drastically reduce the number of model points used in model runs, and hence significantly reduce runtime. This is achieved by clustering model points into a reduced dataset of cluster centroids that capture the key characteristics of the whole dataset closely enough to reproduce the results of the full model.

Cluster analysis can also be performed on large datasets as an analytical tool to better understand their underlying characteristics. Patterns and trends can be easier to identify from clustered data, and outliers and anomalies easier to detect. Example uses of this analysis for life insurers include:

- Experience analysis. Clustering experience data gives a better initial understanding of its features and how those features change over time; e.g. how claims have moved over the year for a particular cluster of policyholders.

- Understanding volatility. Where the Internal Model (‘IM’) SCR is calculated using an all-risk aggregation approach, clustering can be used to group scenarios around the 1-in-200 SCR scenario allowing users to investigate proxy modelling errors and to understand the drivers behind the IM SCR.

- Anomaly detection. Clustering can be used to find anomalies in a dataset by looking at points that sit at the boundaries or are furthest away from cluster centroids.
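The anomaly-detection idea can be sketched by measuring each point's distance from its own cluster centroid and flagging points beyond a cut-off, furthest first. This is an illustrative Python sketch: the Euclidean distance, the threshold of 2.0, the data and the function name are assumptions, and with real data the features would usually need to be put on comparable scales before distances mean much.

```python
import math


def flag_anomalies(points, labels, centroids, threshold):
    """Return (index, distance) for points whose Euclidean distance from
    their own cluster centroid exceeds threshold, furthest first."""
    flagged = []
    for i, (point, label) in enumerate(zip(points, labels)):
        distance = math.dist(point, centroids[label])
        if distance > threshold:
            flagged.append((i, distance))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical data, labels and centroids (each centroid is the mean of its cluster)
points = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5), (3.0, 4.0), (10.0, 10.0), (10.5, 10.0)]
labels = [0, 0, 0, 0, 1, 1]
centroids = {0: (0.875, 1.125), 1: (10.25, 10.0)}

for index, distance in flag_anomalies(points, labels, centroids, threshold=2.0):
    print(index, round(distance, 3))
```

Only the point at index 3, which sits well away from the rest of its cluster, is flagged.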

Clustering can also be used to segment consumers into groups with similar characteristics, so that different product prices can be charged based on the characteristics or demographics of each group.

Not everyone may buy into your vision, and some may not be sure whether they want to learn R, which is very different to Excel. We have actuaries who had not written any code before joining us but are now proficient coders. We encourage our team to have a growth mindset, which plays to one of actuaries' natural strengths.

Many actuaries are curious to learn, so we instil a mindset that any skill can be learned as long as you put your mind to it. You probably couldn’t build a cashflow model in Excel straight out of school, but you learned. You might not be able to automate some data checking in R now, but if you put the time in you will learn. Upskilling takes time, effort and a willingness to continually learn.

How have we implemented clustering algorithms?

Several approaches to clustering exist, each with its own advantages and disadvantages, and the approach chosen for a particular use case will depend on the nature of the data. Examples of clustering approaches include:

- Centroid-based clustering

- Density-based clustering

- Distribution-based clustering

- Hierarchical clustering

Centroid-based algorithms are the best-known clustering algorithms; although they are sensitive to the initial parameters chosen, they are efficient and easy to implement.

K-means clustering is the most widely used clustering algorithm and falls under the centroid-based category. Its disadvantages include limited scalability to very large datasets and the fact that the resulting clusters can vary with the initialisation chosen. We do not consider these disadvantages material for our use case, grouping liability model points, because liability model point files are generally of a manageable size and testing performed to date shows that the variability of clustered liability results is low.
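The core of k-means is short. The sketch below is a plain-Python version of Lloyd's algorithm, written for illustration rather than taken from the authors' R tool. It handles initialisation sensitivity in the usual way: run several random starts and keep the one with the lowest within-cluster sum of squares. In R, `stats::kmeans` exposes the same idea through its `nstart` argument; the function name, parameter names and defaults here are assumptions.

```python
import random


def kmeans(points, k, n_start=10, max_iter=100, seed=0):
    """Lloyd's k-means with several random starts.

    Returns (centroids, labels, wcss) for the start with the lowest
    within-cluster sum of squares (wcss).
    """
    rng = random.Random(seed)

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    best = None
    for _ in range(n_start):
        # Initialise with k data points drawn at random without replacement
        centroids = list(rng.sample(points, k))
        labels = None
        for _ in range(max_iter):
            # Assignment step: each point joins its nearest centroid
            new_labels = [
                min(range(k), key=lambda c: sq_dist(p, centroids[c]))
                for p in points
            ]
            if new_labels == labels:
                break  # assignments unchanged, so this start has converged
            labels = new_labels
            # Update step: move each centroid to the mean of its members
            for c in range(k):
                members = [p for p, lab in zip(points, labels) if lab == c]
                if members:  # an empty cluster keeps its previous centroid
                    centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
        wcss = sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))
        if best is None or wcss < best[2]:
            best = (centroids, labels, wcss)
    return best


# Hypothetical two-feature data with two obvious groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels, wcss = kmeans(points, k=2)
print(labels, round(wcss, 4))
```

Which group receives label 0 depends on the random start, so comparisons between runs should look at the groupings and the total within-cluster sum of squares rather than the label numbers.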

Other clustering algorithms considered included distribution-based Gaussian Mixture Models (‘GMMs’), Density-Based Spatial Clustering of Applications with Noise (‘DBSCAN’) and Balanced Iterative Reducing and Clustering using Hierarchies (‘BIRCH’). While some of these algorithms offered shorter run-times, potentially better results and more flexibility in the shape of clusters, we chose k-means for its ease of implementation.

We have built a tool in R to carry out k-means clustering. As a proof of concept, we applied this tool to the in-force policy data for a book of annuity business.

The full dataset included c10,000 policies, and we used the tool to cluster it into a smaller number of model points. We produced several clustered datasets with different levels of clustering applied (i.e. with the number of clusters/model points ranging from c2,000 down to just 24). The clustered datasets were then run through the valuation model and compared against the base (full) model results.

How well does the clustered data replicate full model results?

All clustered datasets produced results close to the full model results, with the largest residual just over 2% of the modelled base reserves. As expected, the residual increases as the compression ratio decreases: with fewer model points, more information is lost.

The model results for each level of clustering are shown in the table and graph below:

| Clustering compression ratio* | Number of model points used | Total reserves (£) | Total residual (base less clustered reserves, £) | Total residual (% of base) |
|---|---|---|---|---|
| 100% / full base model | 10,000 | 504,332,255 | 0 | 0.00% |
| 20% | 2,009 | 504,153,503 | 178,752 | 0.04% |
| 15% | 1,511 | 504,062,196 | 270,059 | 0.05% |
| 10% | 1,009 | 503,988,104 | 344,152 | 0.07% |
| 5% | 512 | 502,667,146 | 1,665,109 | 0.33% |
| 1% | 112 | 499,933,047 | 4,399,208 | 0.87% |
| 0.20% | 24 | 494,097,268 | 10,234,988 | 2.03% |

*Clustering compression ratio = number of clustered points / number of data points in original dataset.

Residuals and time reduction achieved using different levels of clustering
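The residual columns in the table can be reproduced from the reserve figures: each residual is base reserves less clustered reserves, also expressed as a percentage of base reserves. The Python sketch below recomputes them from the published numbers; two residuals come out £1 lower than shown, presumably because the published reserves are rounded. The function and variable names are illustrative.

```python
BASE_RESERVES = 504_332_255  # £, full base model with 10,000 model points

# (model points, total clustered reserves in £) for each clustered run
clustered_runs = [
    (2_009, 504_153_503),
    (1_511, 504_062_196),
    (1_009, 503_988_104),
    (512, 502_667_146),
    (112, 499_933_047),
    (24, 494_097_268),
]


def residual(clustered_reserves, base_reserves=BASE_RESERVES):
    """Residual = base less clustered reserves, in £ and as % of base."""
    diff = base_reserves - clustered_reserves
    return diff, 100 * diff / base_reserves


for n_points, reserves in clustered_runs:
    diff, pct = residual(reserves)
    print(f"{n_points:>5} model points: residual £{diff:,} ({pct:.2f}% of base)")
```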

Using clustered data achieved a significant reduction in model runtime: the full dataset of 10,000 policies took 4 minutes 40 seconds to run, and runtime fell progressively as the level of clustering increased, down to 1 minute 56 seconds for the 1% clustered run (a 59% reduction). Although this model was quick to run and runtime was not onerous in this case, the results demonstrate that material reductions in run-time can be achieved through clustering.
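The quoted 59% follows directly from the elapsed times: 4:40 is 280 seconds and 1:56 is 116 seconds, so the reduction is 1 - 116/280 ≈ 58.6%, which rounds to 59%. A small Python check of that arithmetic (the helper name is illustrative):

```python
def to_seconds(elapsed):
    """Convert an elapsed time written as 'm:ss' into seconds."""
    minutes, seconds = elapsed.split(":")
    return int(minutes) * 60 + int(seconds)


full_run = to_seconds("4:40")       # full dataset, c10,000 policies
clustered_run = to_seconds("1:56")  # 1% clustered run
reduction = 1 - clustered_run / full_run
print(f"{full_run}s -> {clustered_run}s: {reduction:.0%} time reduction")
# 280s -> 116s: 59% time reduction
```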

Care should be taken when selecting a clustering compression ratio, as heavily clustered model point files can produce residuals of different magnitudes at different percentiles of the all-risk distribution, for example in the IM SCR biting scenario. We therefore recommend that users select a compression ratio by comparing residuals under a range of univariate and multivariate stresses against a predefined tolerance.
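That selection rule can be sketched as: for each candidate ratio, check the residual under every stress against the tolerance, then pick the smallest ratio that passes all of them. In this Python sketch the function name, the 0.5% tolerance and the data structure are hypothetical. Only the base-scenario residuals are taken from the results table; in practice each ratio would also carry residuals for the univariate and multivariate stresses (for example a hypothetical `"longevity_down"` entry), which are not reproduced here.

```python
def select_compression_ratio(residuals_by_ratio, tolerance):
    """Return the smallest compression ratio whose absolute residual (as a
    fraction of base reserves) is within tolerance under every stress.

    residuals_by_ratio maps ratio -> {stress name: residual fraction}.
    Returns None if no ratio passes.
    """
    passing = [
        ratio
        for ratio, residuals in residuals_by_ratio.items()
        if all(abs(r) <= tolerance for r in residuals.values())
    ]
    return min(passing) if passing else None


# Base-scenario residuals from the results table, as fractions of base reserves.
# Stressed residuals would be added to each inner dict in practice.
residuals = {
    0.20: {"base": 0.0004},
    0.15: {"base": 0.0005},
    0.10: {"base": 0.0007},
    0.05: {"base": 0.0033},
    0.01: {"base": 0.0087},
    0.002: {"base": 0.0203},
}
print(select_compression_ratio(residuals, tolerance=0.005))  # 0.05
```

Taking the minimum over all passing ratios assumes residuals shrink as the ratio increases; if stressed results do not behave that way, a more conservative rule (for example requiring every larger ratio to pass too) may be preferable.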

Can we help you?

If you or your team could benefit from our expertise in implementing clustering, please contact Abdal Chaudhry, a key contributor to this case study, or the author below. We will be happy to provide additional information and arrange a demonstration.
